Another Monday, another week of business excitement.

Venture investment firms are getting nervous as projections of a slow IPO market in 2024 make exit profitability iffy as each fund matures. So what to do? Simple: roll the money into another fund that has no exit date and call it a day. And so continuation funds, and their more desperate cousins, strip sales, are generating increased interest (subscription required, and I'm really sorry about that).

It’s hard to support a wealthy lifestyle before one is truly wealthy, but somehow VCs manage with their fees. But they want more. And they’re going to get it, by hook (clawback) or by crook (IPO).

It may be hard to believe, given that most of these funds have ten-year lifetimes and we've gone through a lot of "booms" over the last decade, but apparently they've chosen so poorly that they don't have the exits they promised their limited partners. In a more cynical moment, one might also wonder if the carry was so good they held off on distributions, hoping for more.

And then the pandemic hit. And then inflation surged. And now we are watching the world grow hotter in terms of both climate and strife.

There are still optimists, however.

UBS Wealth Management just released a case study claiming that the 2020s will look more like the 1990s (without the early-1990s recession) than like some Roaring '20s stock market insanity that ends with folks jumping off buildings. For that alone, I must confess I'd rather consider a Clinton-style "Americans for a Bigger America" economic boom, as I don't like heights.

In terms of technology investment, the 1990s was a good era for us personally.

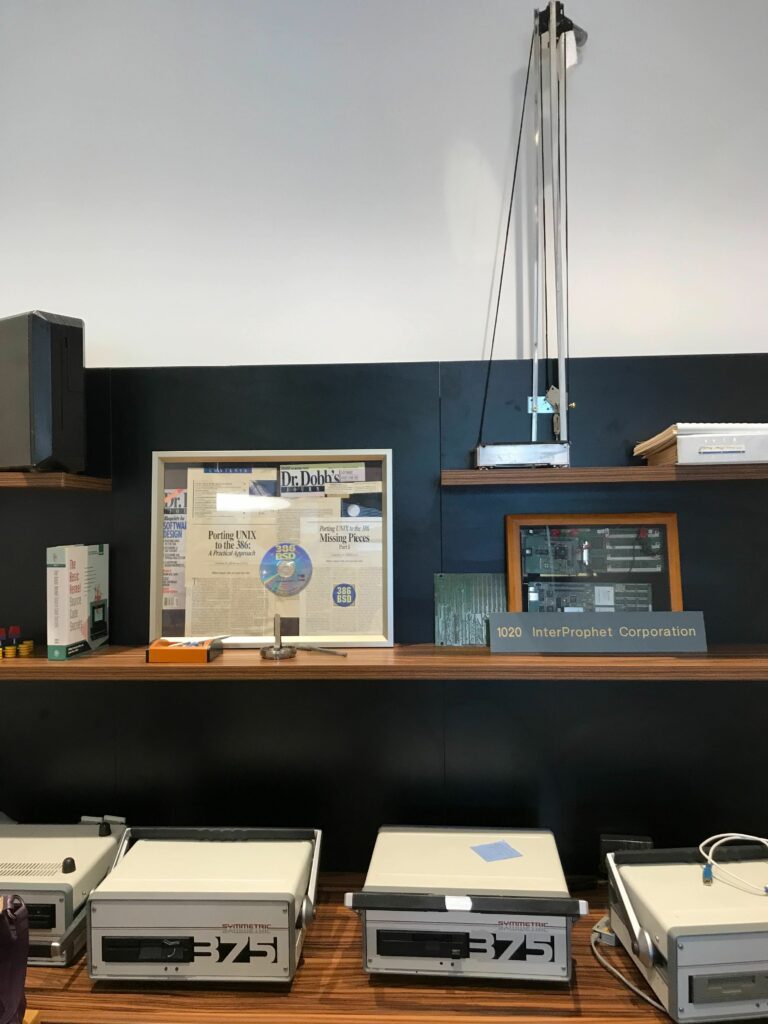

We started off in 1991 (after two prior years of work with Berkeley with no real support) with the introduction of "Porting Unix to the 386" in Dr. Dobb's Journal. Over the next two years, we painstakingly described our 386BSD port of Berkeley Unix, from design to execution to distribution over the Internet.

- 386BSD made manifest Stallman's concept of freely shared source code (what would later be called "open source") as a means to encourage innovative software development.

- 386BSD demonstrated that the Internet could be a viable mechanism for software distribution and updates instead of CD-ROMs and other hard media.

- 386BSD provided universities and research groups all over the world the economic means to finally conduct OS and software research using Berkeley Unix on inexpensive 386 PCs instead of minicomputers and mainframes.

- 386BSD spurred a plethora of newly funded startups focused on open source online tools and support.

In sum, 386BSD broke the logjam on university research encumbered by proprietary agreements and spurred the growth of a new industry in Silicon Valley.

Not bad for a research OS project that was disliked by Berkeley’s CS department and essentially moribund by 1989.

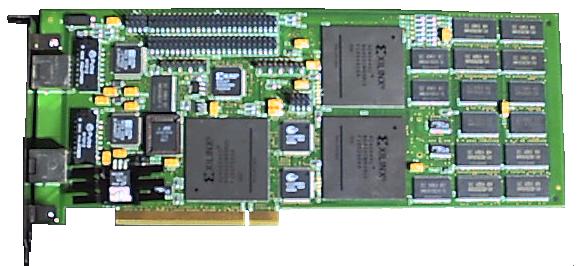

In 1997, we observed that Internet traffic wasn't well-optimized. We launched and obtained funding for InterProphet, a low-latency TCP processor dataflow engine. In 1998 we went from concept to patents to prototype. We proved that dataflow architectures, a non-starter for floating point processing in the 1980s, were a viable means of offloading TCP processing from the kernel to a dedicated processor, just as graphics had been offloaded to dedicated graphics processors. We did this on a million-dollar handshake investment and a handful of creative engineers. Silicon Valley can be an amazing place.

Global strife and pain aren't usually good for business. The prior decade was an age of "renovation, not innovation": hyper-focused on strip-mining extant technologies and vending rent-seeking fads, and unequipped to deal with these unsettled times.

But such times often spur interest in non-conventional problem-solving, opening a door to new technologies and risky solutions. Given the huge issues with climate change and what it spawns, we do need more “innovation, not renovation”.

I guess I’m just an optimist.

Speaking of technology fads, as the smoke clears and the mirrors crack, will generative AI still be the savior Silicon Valley hopes it will be? As with all such questions, it depends.

If one owns the datasets from which one mines the answers, likely "Yes". Security and privacy issues are moot inside your datacenter, for the most part, assuming you actually invest in security. Cost reduction is a viable metric businesses can use to judge the efficacy of AI independent of fads. Expect to see use cases that focus on support and customer effectiveness. We should also expect new solutions crafted from the analysis of highly complex areas, from drug development to climate modeling.

However, generative AI has limits. The latest of a thousand cuts from critics, courtesy of Google researchers, demonstrated that a prompt built around a single word could push ChatGPT's generative responses so far that it begins to spew out actual training data scraped from the Internet. Using an extraction technique that relied on an endless request (asking the model to repeat a word "forever"), they achieved immediate results:

“After running similar queries again and again, the researchers had used just $200 to get more than 10,000 examples of ChatGPT spitting out memorized training data, they wrote. This included verbatim paragraphs from novels, the personal information of dozens of people, snippets of research papers and “NSFW content” from dating sites, according to the paper.”

I'm sure a new set of infringement lawsuits is already in the works. Maybe they're even using ChatGPT to generate them. Who knows?

So let us send our thoughts and prayers to the poor VCs and happy lawyers. I haven’t seen this much eagerness for technology infringement lawsuits since the USL v UCB and Java v Everybody years.