I’ve been planning to write something for a while, but frankly, there hasn’t been anything really fun to write about.

Everyone is complaining about gas prices and inflation. Global trade is still bottlenecked and tangled in knots. There’s still a pandemic, folks, although you wouldn’t know it from the way people are dancing like it’s the last night before the End of the World.

On the business front, venture is busily grabbing any money they can to hoard while telling their portfolio companies to “tighten the belt”, mainly around the necks of their employees. Companies are eagerly complying by rescinding job offers and instituting layoffs. Folks are nervous as they crowd airports, hoping their flight isn’t one of the hundreds cancelled that day due to lack of flight staff. And the war in Ukraine waged by Russia in a fit of insanity continues to kill innocents and destabilize the entire EU.

Speaking of dead innocents, the US Supreme Court, destined to go down in history as depraved pandering sacks of shit, decided that guns everywhere makes for a stronger America. Their overturning of Roe v Wade, expected after the leaked draft admiring the people who burned innocent people as witches crawled out of the sewers, has been released and to no one’s surprise reduced women to the status of beasts. Yes, it is not a Fun Friday for many people. Maybe it’s a Gun Friday. I’m sorry.

Roe v Wade was decided in 1973. I was twelve. It impacted my life and health for the better. Today it is officially overturned in a ruthless precedent-be-damned legal coup. I am sixty, past childbearing age. It cannot impact me directly. Yet I have daughters and young people I care about. I don’t want to see them hurt. Their happiness and livelihood and health matter to me. They should have the same rights to choice and freedom that I had. They may not know how much it matters yet. But they will. I am sure of that.

I spent the morning cleaning one of William’s prototype telescope designs for display in the office. It’s an unusually compact and minimalist design. As I cleaned the mirror and cover plate, I found a cricket living in the focuser. I watched it hop off the picnic table and out of sight, grabbed the telescope, and took it to the office.

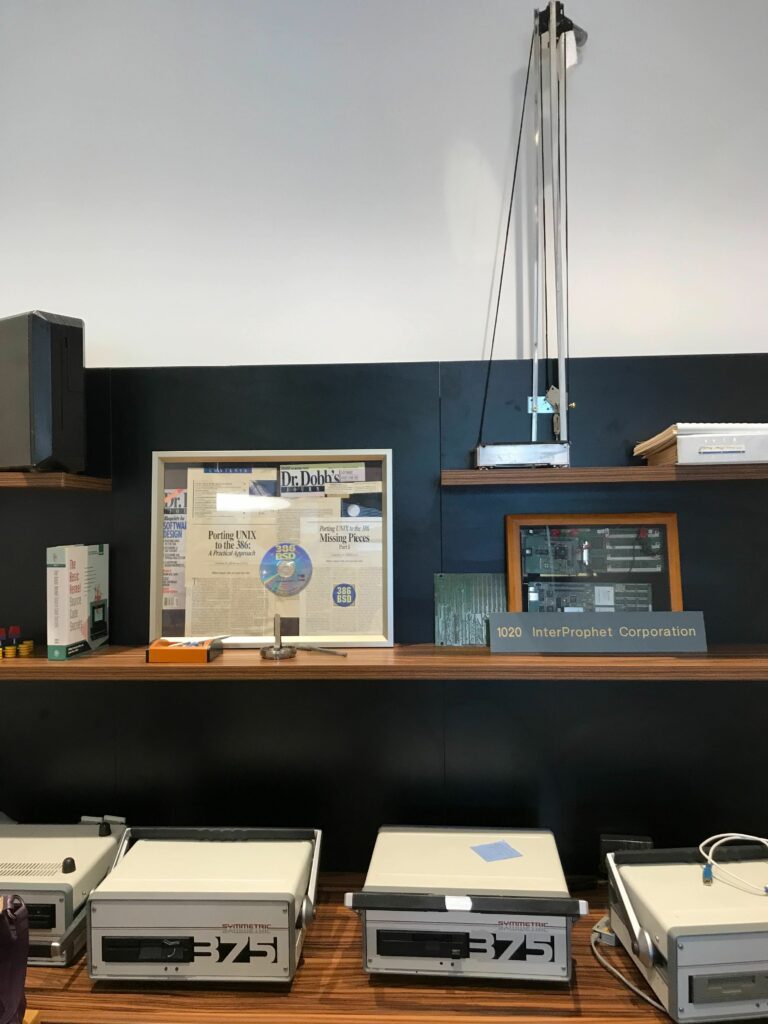

It now sits amongst the many creative works William and I did together. Our reliquary. 375 computers. InterProphet low-latency networking boards. 386BSD articles and books and CDROM. An unpopulated six layer 375 motherboard.

In other parts of the office, an EtherSAN prototype unit box, a 386BSD CDROM with the heftiest liner notes ever made, 386 computers of various vintages used for 386BSD, and bins of 386BSD and 375 disk drives, boards, and cables. Some complete and some mid-project, designs waiting for a hand to finish the work.

It is a reminder that things are never finished — they are only left in a state of usability for a time. Once that time passes, one either has to toss it away or begin again. I choose both. To toss some things away and to begin again on other things.

Young people also have a choice. They can fight for their freedoms — and they can toss them away. I hope they choose wisely.